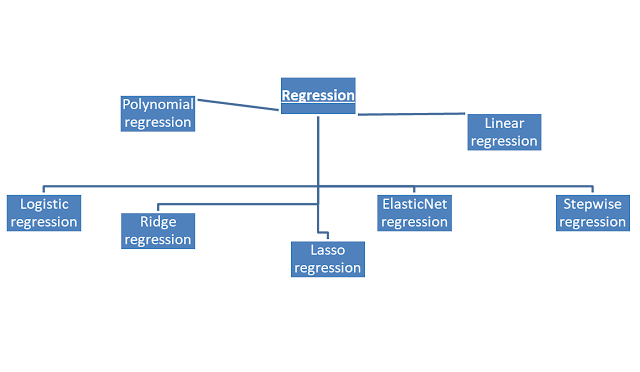

Discuss Types of Regression with Suitable Examples and a Diagram

Definition of Regression:

Regression is a data mining technique used to make predictions from the datasets we choose, for example data collected from surveys. A typical use is predicting the cost of a specific product. The value being predicted is always numeric.

Linear:

Linear regression is used for predictive analysis. It models the relationship between a scalar response (the criterion) and one or more predictors (explanatory variables) using a linear approach. Linear regression focuses on the conditional probability distribution of the response given the values of the predictors. Like other fitted models, it can overfit, particularly when there are many predictors relative to the number of observations. With a single predictor, the formula is $Y' = bX + A$, where $Y'$ is the predicted value, $b$ is the slope, and $A$ is the intercept.
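A minimal sketch of fitting $Y' = bX + A$ in plain Python, assuming ordinary least squares as the fitting method (the usual choice, although the text does not name one). The function names and toy data are hypothetical; they only show that the slope $b$ and intercept $A$ come from two simple sums.

```python
def fit_simple_linear(xs, ys):
    """Ordinary least squares fit of Y' = bX + A for a single predictor."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two (x, y) pairs of equal length")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # b = sum((x - mean_x) * (y - mean_y)) / sum((x - mean_x)^2)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all x values are identical; slope is undefined")
    b = sxy / sxx
    # The least-squares line always passes through (mean_x, mean_y).
    a = mean_y - b * mean_x
    return b, a


def predict(b, a, x):
    """Evaluate Y' = bX + A."""
    return b * x + a


if __name__ == "__main__":
    # Hypothetical toy data generated from y = 2x + 1.
    xs = [1, 2, 3, 4]
    ys = [3, 5, 7, 9]
    b, a = fit_simple_linear(xs, ys)
    print(b, a)               # 2.0 1.0
    print(predict(b, a, 10))  # 21.0
```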

Logistic:

Logistic regression is used when the dependent variable is dichotomous, that is, it has exactly two possible values such as yes/no. It is a type of binomial regression that estimates the parameters of a logistic model, and it describes the relationship between a two-valued criterion and the predictors. With two predictors, the equation is $\ell = \beta_0 + \beta_1 x_1 + \beta_2 x_2$, where $\ell = \ln\frac{p}{1-p}$ is the log-odds that the outcome equals 1, so the probability itself is $p = \frac{1}{1 + e^{-\ell}}$.
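The plain-Python sketch below shows how the log-odds $\ell$ turns into a probability and one way the betas might be estimated. It assumes batch gradient descent on the log-loss as the fitting method (libraries often use other solvers); the function names, learning rate, epoch count, and data are hypothetical.

```python
import math


def sigmoid(z):
    """Convert log-odds z into a probability p = 1 / (1 + e^(-z))."""
    # Branch on the sign so math.exp never overflows for large |z|.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def log_odds(betas, x):
    """l = beta0 + beta1*x1 + beta2*x2 + ... for one observation x."""
    return betas[0] + sum(b * xi for b, xi in zip(betas[1:], x))


def fit_logistic(X, y, lr=0.1, epochs=2000):
    """Estimate the betas by batch gradient descent on the average log-loss."""
    n, k = len(X), len(X[0]) + 1
    betas = [0.0] * k
    for _ in range(epochs):
        grad = [0.0] * k
        for xi, yi in zip(X, y):
            # The gradient of the log-loss with respect to the log-odds is (p - y).
            err = sigmoid(log_odds(betas, xi)) - yi
            grad[0] += err
            for j, v in enumerate(xi, start=1):
                grad[j] += err * v
        betas = [b - lr * g / n for b, g in zip(betas, grad)]
    return betas


if __name__ == "__main__":
    # Hypothetical, centred data: two predictors and a 0/1 outcome.
    X = [[-3.0, -2.0], [-2.0, -3.0], [-1.0, -2.0], [-2.0, -1.0],
         [1.0, 2.0], [2.0, 1.0], [2.0, 3.0], [3.0, 2.0]]
    y = [0, 0, 0, 0, 1, 1, 1, 1]
    betas = fit_logistic(X, y)
    print("betas:", [round(b, 3) for b in betas])
    for point in ([-2.0, -2.0], [2.0, 2.0]):
        print(point, "P(y=1) =", round(sigmoid(log_odds(betas, point)), 3))
```

Because this toy data is perfectly separable, the betas keep growing the longer it trains; practical implementations usually add a penalty term to keep them finite.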

Polynomial:

Polynomial regression is used for curved data. Like linear regression, it predicts the value of a dependent variable $y$ from an independent variable $x$, but it models $y$ as a polynomial in $x$. It is fitted with the least squares method. For degree $n$, the equation is $y = \beta_0 + \beta_1 x + \beta_2 x^2 + \dots + \beta_n x^n + \varepsilon$, where $\varepsilon$ is the error term.
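A minimal sketch of least-squares polynomial fitting in plain Python, assuming the normal equations $(X^T X)\beta = X^T y$ are solved directly, where each row of $X$ is $[1, x, x^2, \dots, x^n]$. Names and data are hypothetical; the data is an exact quadratic, so the fit should recover its coefficients.

```python
def solve_linear_system(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    # Work on an augmented copy so the caller's lists are not modified.
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular or nearly singular")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    # Back substitution on the upper-triangular system.
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(M[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (M[r][n] - s) / M[r][r]
    return x


def fit_polynomial(xs, ys, degree):
    """Least-squares fit of y = beta0 + beta1*x + ... + beta_d*x^d."""
    # Each row of the design matrix is [1, x, x^2, ..., x^degree].
    X = [[x ** p for p in range(degree + 1)] for x in xs]
    k = degree + 1
    # Normal equations: (X^T X) beta = X^T y.
    XtX = [[sum(row[i] * row[j] for row in X) for j in range(k)] for i in range(k)]
    Xty = [sum(row[i] * y for row, y in zip(X, ys)) for i in range(k)]
    return solve_linear_system(XtX, Xty)


def predict_polynomial(betas, x):
    """Evaluate the fitted polynomial at x."""
    return sum(b * x ** p for p, b in enumerate(betas))


if __name__ == "__main__":
    # Hypothetical curved data from y = 1 - 2x + 0.5x^2 (no noise).
    xs = [-2, -1, 0, 1, 2, 3, 4]
    ys = [1 - 2 * x + 0.5 * x ** 2 for x in xs]
    betas = fit_polynomial(xs, ys, degree=2)
    print([round(b, 6) for b in betas])
```

Solving the normal equations becomes numerically fragile as the degree grows; NumPy's `numpy.polyfit` performs the same least-squares fit more robustly.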

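Stepwise:

Stepwise regression fits a regression model while the choice of predictor variables is carried out by an automated procedure. At each step, a variable is added to or removed from the set of explanatory variables. The three main approaches are forward selection, backward elimination, and bidirectional elimination (a combination of the two). To compare predictors measured on different scales, each coefficient can be standardized as $b_{j}^{\text{std}} = b_j \, \frac{s_{x_j}}{s_y}$, where $s_{x_j}$ and $s_y$ are the standard deviations of predictor $x_j$ and of the response.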
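The forward-selection variant can be sketched as follows in plain Python. This is an illustration under stated assumptions, not a standard implementation: each candidate model is an ordinary least squares fit with an intercept, and the stopping rule (stop once the best candidate lowers the residual sum of squares by less than a hypothetical `min_improvement` fraction) stands in for the F-tests, p-values, or information criteria such as AIC that statistical packages typically use. All names and data are hypothetical.

```python
def solve(A, b):
    """Solve the square system A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [v] for row, v in zip(A, b)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        if abs(M[p][c]) < 1e-12:
            raise ValueError("predictors are collinear; system is singular")
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for k in range(c, n + 1):
                M[r][k] -= f * M[c][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][k] * x[k] for k in range(r + 1, n))) / M[r][r]
    return x


def rss_for(columns, data, y):
    """Residual sum of squares of an OLS fit (with intercept) on the given columns."""
    rows = [[1.0] + [rec[j] for j in columns] for rec in data]
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(k)]
    beta = solve(xtx, xty)
    return sum((yi - sum(b * v for b, v in zip(beta, r))) ** 2 for r, yi in zip(rows, y))


def forward_selection(data, y, min_improvement=0.01, tol=1e-9):
    """Forward selection: greedily add the predictor that lowers the RSS the most."""
    selected, remaining = [], list(range(len(data[0])))
    current = rss_for(selected, data, y)  # start from the intercept-only model
    while remaining and current > tol:
        best_rss, best_j = min((rss_for(selected + [j], data, y), j) for j in remaining)
        # Hypothetical stopping rule: quit when the relative RSS drop is too small.
        if (current - best_rss) / current < min_improvement:
            break
        selected.append(best_j)
        remaining.remove(best_j)
        current = best_rss
    return selected


if __name__ == "__main__":
    # Hypothetical data: columns are x0, x1, x2 and y = 1 + 3*x0 - 2*x2 exactly.
    data = [[1, 3, 2], [2, 1, 1], [3, 1, 4], [4, 3, 3], [5, 3, 6], [6, 1, 5]]
    y = [0, 5, 2, 7, 4, 9]
    print(forward_selection(data, y))  # [0, 2]: x1 is never added
```

Backward elimination runs the same idea in reverse, starting from all predictors and removing the one whose removal hurts the fit least.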

Ridge:

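Ridge regression is a method for estimating the coefficients of multiple regression models when the independent variables are highly correlated (multicollinearity). When multicollinearity occurs, least squares estimates are unbiased, but their variances are large, so they may be far from the true values. Ridge regression reduces the standard errors by adding a degree of bias to the regression estimates. The ridge estimator is $\hat{\beta} = (X^{T}X + \lambda I)^{-1} X^{T} y$, where $\lambda \ge 0$ controls the amount of shrinkage and $I$ is the identity matrix.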
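A minimal plain-Python sketch of the closed-form ridge estimator. It assumes the predictors and response have already been centred, so no intercept is fitted, and it solves $(X^T X + \lambda I)\beta = X^T y$ rather than inverting the matrix. Function names and data are hypothetical.

```python
def solve(A, b):
    """Solve the square system A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [v] for row, v in zip(A, b)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        if abs(M[p][c]) < 1e-12:
            raise ValueError("matrix is singular; try a larger lambda")
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for k in range(c, n + 1):
                M[r][k] -= f * M[c][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][k] * x[k] for k in range(r + 1, n))) / M[r][r]
    return x


def ridge(X, y, lam):
    """Ridge estimate: beta = (X^T X + lam * I)^(-1) X^T y.

    Assumes the columns of X and y are already centred, so there is no
    intercept term (in practice the intercept is not penalised).
    """
    p = len(X[0])
    A = [[sum(row[i] * row[j] for row in X) + (lam if i == j else 0.0)
          for j in range(p)] for i in range(p)]
    rhs = [sum(row[i] * yi for row, yi in zip(X, y)) for i in range(p)]
    # Solving the linear system is more stable than forming the inverse.
    return solve(A, rhs)


if __name__ == "__main__":
    # Hypothetical centred data with two highly correlated predictors.
    X = [[-2.0, -2.1], [-1.0, -0.9], [0.0, 0.1], [1.0, 0.9], [2.0, 2.0]]
    y = [a + b for a, b in X]  # true coefficients are (1, 1)
    for lam in (0.0, 1.0, 10.0):
        print(lam, [round(c, 4) for c in ridge(X, y, lam)])
```

With `lam = 0.0` this reduces to ordinary least squares; increasing `lam` shrinks the coefficients toward zero, trading a little bias for lower variance.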

Lasso:

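Lasso regression is a regression analysis technique that performs both variable selection and regularization. It relies on soft thresholding, which can shrink some coefficients exactly to zero, so the final model uses only a subset of the provided covariates. Given a loss function $f$ (the squared error, for linear regression), the lasso estimate minimizes $\frac{1}{N}\sum_{i=1}^{N} f(x_i, y_i, \alpha, \beta)$ subject to $\lVert \beta \rVert_1 \le t$, where $\alpha$ is the intercept, $\beta$ is the coefficient vector, and $t$ is a tuning parameter.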
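One common way to compute the lasso is coordinate descent with the soft-thresholding operator $S(\rho, \lambda) = \operatorname{sign}(\rho)\max(|\rho| - \lambda, 0)$. The plain-Python sketch below assumes squared-error loss, centred data with no intercept, and the penalized form $\frac{1}{2N}\lVert y - X\beta\rVert_2^2 + \lambda\lVert\beta\rVert_1$, which corresponds to the constraint $\lVert\beta\rVert_1 \le t$ for a suitable $\lambda$. The names, iteration count, and data are hypothetical.

```python
def soft_threshold(rho, lam):
    """S(rho, lam) = sign(rho) * max(|rho| - lam, 0)."""
    if rho > lam:
        return rho - lam
    if rho < -lam:
        return rho + lam
    return 0.0


def lasso_coordinate_descent(X, y, lam, n_iter=1000):
    """Minimise (1/(2N)) * ||y - X beta||^2 + lam * ||beta||_1 by coordinate descent."""
    n, p = len(X), len(X[0])
    beta = [0.0] * p
    for _ in range(n_iter):
        for j in range(p):
            # Correlation of feature j with the partial residual (feature j left out).
            rho = sum(
                X[i][j] * (y[i] - sum(X[i][k] * beta[k] for k in range(p) if k != j))
                for i in range(n)
            ) / n
            z = sum(X[i][j] ** 2 for i in range(n)) / n
            # Soft thresholding sets small coefficients exactly to zero.
            beta[j] = soft_threshold(rho, lam) / z
    return beta


if __name__ == "__main__":
    # Hypothetical centred data: x0 and x1 are orthogonal and y = 2 * x0.
    X = [[1.0, 1.0], [-1.0, 1.0], [1.0, -1.0], [-1.0, -1.0]]
    y = [2.0, -2.0, 2.0, -2.0]
    print(lasso_coordinate_descent(X, y, lam=0.5))  # [1.5, 0.0]: x1 is dropped
```

scikit-learn's `sklearn.linear_model.Lasso` minimizes the same penalized objective (with `alpha` in place of $\lambda$) using coordinate descent.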

Regression example and diagram:

Suppose we are analyzing a business that sells products such as Vivo phones, and we want to study five years of data relating the amount spent on advertising the phone to its sales.

The table below shows the yearly advertising cost and sales for the Vivo phone:

| Year | Advertisement cost (dollars) | Sales (dollars) |
|------|------------------------------|-----------------|
| Year 1 | 50 | 1150 |
| Year 2 | 60 | 2150 |
| Year 3 | 70 | 3250 |
| Year 4 | 80 | 1530 |
| Year 5 | 90 | 5430 |
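Assuming a simple linear least-squares fit of $Y' = bX + A$ with advertising cost as $X$ and sales as $Y$ (both in dollars, from the table), the coefficients work out as follows:

$$
\begin{aligned}
\bar{X} &= \frac{50+60+70+80+90}{5} = 70, \qquad
\bar{Y} = \frac{1150+2150+3250+1530+5430}{5} = 2702,\\
S_{XY} &= \sum_i (X_i-\bar{X})(Y_i-\bar{Y})\\
&= (-20)(-1552) + (-10)(-552) + (0)(548) + (10)(-1172) + (20)(2728) = 79400,\\
S_{XX} &= \sum_i (X_i-\bar{X})^2 = 400+100+0+100+400 = 1000,\\
b &= \frac{S_{XY}}{S_{XX}} = 79.4, \qquad
A = \bar{Y} - b\bar{X} = 2702 - 79.4 \times 70 = -2856,\\
Y' &= 79.4X - 2856.
\end{aligned}
$$

In this data, each additional dollar of advertising is associated with about 79.40 dollars of extra sales. For instance, an advertising cost of 100 dollars, outside the observed range, would give a predicted $Y' = 79.4 \times 100 - 2856 = 5084$ dollars; with only five points and the Year 4 dip, such a prediction is rough.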
